

Researchers are developing a new type of surround sound in York and Sydney

2005-02-01 - York, England & Sydney, Australia - Ultra-realistic surround sound is a step closer for everyone thanks to a new method that will cheaply and efficiently compute the way individuals hear things.

Currently, creating accurate 'virtual sound fields' through headphones is almost exclusively the domain of high-budget military technologies and involves lengthy and awkward acoustic measurements. The new approach eliminates the acoustic measurement step altogether and promises to produce the required results in mere minutes.

The breakthrough has been made by researchers at the University of York's Department of Electronics, funded by the Engineering and Physical Sciences Research Council (EPSRC). The researchers are working in collaboration with colleagues at the University of Sydney, Australia.

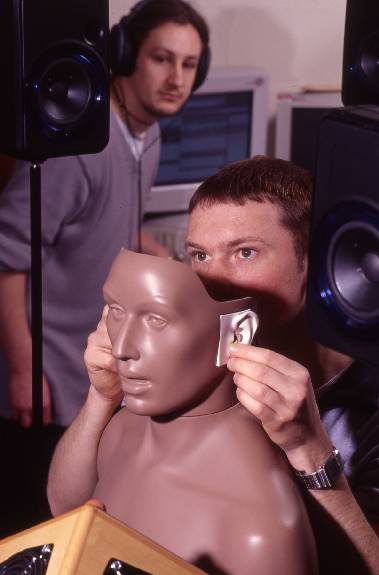

The team are now working to commercialise the idea. Tony Tew, lead researcher at York, explains, "We envisage booths in the high street, like those used for passport photos, where customers can have the shape of their head and ears measured easily. The shape information will be used to quickly compute an individual's spatial filters."

Spatial filters encapsulate how an individual's features alter sounds before they reach the eardrum. The changes vary with direction and so supply the brain with the information it needs to work out where a sound is coming from. Tew's booth would record the spatial filter measurements on to a smart card, readable by next-generation sound systems. The result: sounds heard through headphones should be indistinguishable from the same sounds heard live.

In general, the location of sounds to our left and right can be inferred from the difference in their time of arrival and intensity at each ear. This is exploited, albeit in a limited way, in stereo music recordings. However, distinguishing sounds from the front and behind is a more subtle process and relies on the features of the ear flaps (pinnae). The spatial filters created in this research will be derived with the aid of 3-D images of the pinnae.
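The arrival-time cue described above can be illustrated with a small sketch. It is not the York-Sydney method: it assumes the textbook Woodworth spherical-head approximation, a nominal head radius of 8.75 cm and a sound speed of 343 m/s, none of which appear in the original report. The function name and sign convention are also illustrative choices.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature
HEAD_RADIUS = 0.0875    # m, assumed nominal radius of a spherical head


def woodworth_itd(azimuth_deg, head_radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Approximate interaural time difference (seconds) for a distant source.

    Convention (an assumption of this sketch): 0 degrees is straight ahead,
    positive azimuth is to the listener's right, and a positive result means
    the sound reaches the right ear first.
    """
    theta = math.radians(azimuth_deg)
    # Fold rear directions onto the front: a sphere has no pinnae, so a
    # source at 150 degrees produces the same delay as one at 30 degrees.
    lateral = math.asin(math.sin(theta))
    return (head_radius / c) * (lateral + math.sin(lateral))


if __name__ == "__main__":
    for az in (0, 30, 90, 150):
        print(f"azimuth {az:>3} deg -> ITD {woodworth_itd(az) * 1e6:.0f} microseconds")
```

Under these assumptions a source directly to one side gives a delay of roughly 0.66 ms, while sources at 30 and 150 degrees give identical delays. That front-back ambiguity is why the direction-dependent filtering of the pinnae matters.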

This research is being undertaken in close collaboration with academics at the University of Sydney, Australia. They have pioneered the techniques for using the information about face shape captured by the York group to produce the personalised 'spatial filters', more usually called Head-Related Transfer Functions (HRTFs). These HRTFs can be programmed as software processes or built into microchips that can alter an electronic signal so as to mimic the effects of the head and outer ear before the signal is fed into the ear canals through a pair of headphones. The Australian Research Council funds the University of Sydney work.
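As a rough illustration of an HRTF "programmed as a software process", the sketch below filters a mono signal with a pair of head-related impulse responses (the time-domain form of an HRTF), one per ear. The impulse responses here are made-up toy values, not measured or computed filters, and the function names are hypothetical. A real system would use much longer, listener-specific filters and a faster convolution.

```python
def convolve(signal, kernel):
    """Direct-form linear convolution of two sequences of floats."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


def binaural_render(mono, hrir_left, hrir_right):
    """Return (left, right) headphone signals for one virtual source.

    hrir_left and hrir_right are the impulse responses for the source
    direction, one per ear.
    """
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy impulse responses (hypothetical): a source on the listener's left,
# so the right ear hears it three samples later and at half the level.
TOY_HRIR_LEFT = [1.0, 0.0, 0.0, 0.0]
TOY_HRIR_RIGHT = [0.0, 0.0, 0.0, 0.5]

if __name__ == "__main__":
    left, right = binaural_render([1.0, 0.5], TOY_HRIR_LEFT, TOY_HRIR_RIGHT)
    print("left: ", left)
    print("right:", right)
```

Moving the virtual source means swapping in the filter pair for the new direction. Personalisation means the pair is derived from the individual listener's head and ear shape rather than from a generic head.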

The reproduction of sound first started with the use of a single speaker before progressing to stereo. Nowadays, home cinema can use six or more speakers to give listeners a feeling of being immersed in sound. However, these systems were never intended to recreate sounds that are indistinguishable from the 'live' experience. Beyond surround sound lie the developing technologies of wavefront synthesis and ambisonics, for example, which are much more capable of conveying the direction and even the size of a sound source. Both rely on arrays of loudspeakers. Generally speaking, the more loudspeakers, the better the performance, but also the greater the cost. The method being pursued at York and Sydney employs the technique of binaural sound synthesis, which seeks to recreate the illusion of sound all around the listener simply using two channels of sound, one channel delivered to each ear.

Hearing a traditional stereo signal through headphones normally gives the impression that the sounds are located 'inside' the listener's head. Personalised binaural audio is different from stereo, however, because it includes the subtle distortions to the sound caused by the effect of the head and ear shapes of the listener. As such, it is a fundamentally different way of creating accurate total immersion in a sound field compared to the use of multi-channel loudspeaker arrays.

Rapid-growth portable technologies, such as mobile communications, wearable computers and personal entertainment systems, largely depend on earphones of one sort or another for their reproduction of sound. Earphones are perfect for creating a virtual sound field using the York-Sydney team's method. Realism is only one benefit; the ability to place virtual sounds anywhere around the head has applications in computer games and for producing earcons (the acoustic equivalent of icons on a visual display). Next-generation hearing aids programmed with the wearer's spatial filters will be able to exploit the directional information created by the ear flaps and so help to target one sound while rejecting others.

Tony Tew says, "Our main goal is for personalised spatial filters to figure in a wide range of consumer technologies, making their benefits available to everyone."